Content from Getting Started with Nextflow

Last updated on 2024-12-04 | Edit this page

Estimated time: 40 minutes

Overview

Questions

- What is a workflow and what are workflow management systems?

- Why should I use a workflow management system?

- What is Nextflow?

- What are the main features of Nextflow?

- What are the main components of a Nextflow script?

- How do I run a Nextflow script?

Objectives

- Understand what a workflow management system is.

- Understand the benefits of using a workflow management system.

- Explain the benefits of using Nextflow as part of your bioinformatics workflow.

- Explain the components of a Nextflow script.

- Run a Nextflow script.

Workflows

Analysing data involves a sequence of tasks, including gathering, cleaning, and processing data. This sequence of tasks is called a workflow or a pipeline. These workflows typically require executing multiple software packages, sometimes running on different computing environments, such as a desktop or a compute cluster. Traditionally these workflows have been joined together in scripts using general purpose programming languages such as Bash or Python.

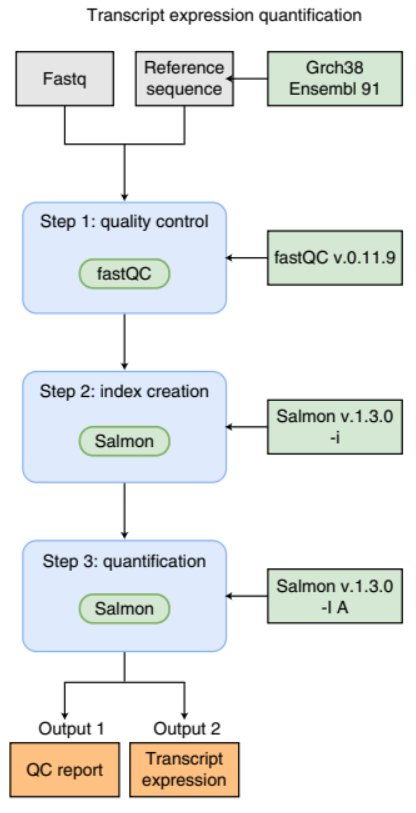

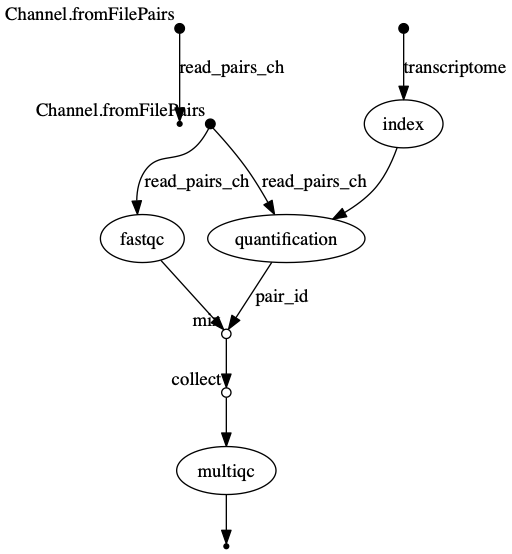

An example of a simple bioinformatics RNA-Seq pipeline.

However, as workflows become larger and more complex, the management of the programming logic and software becomes difficult.

Workflow management systems

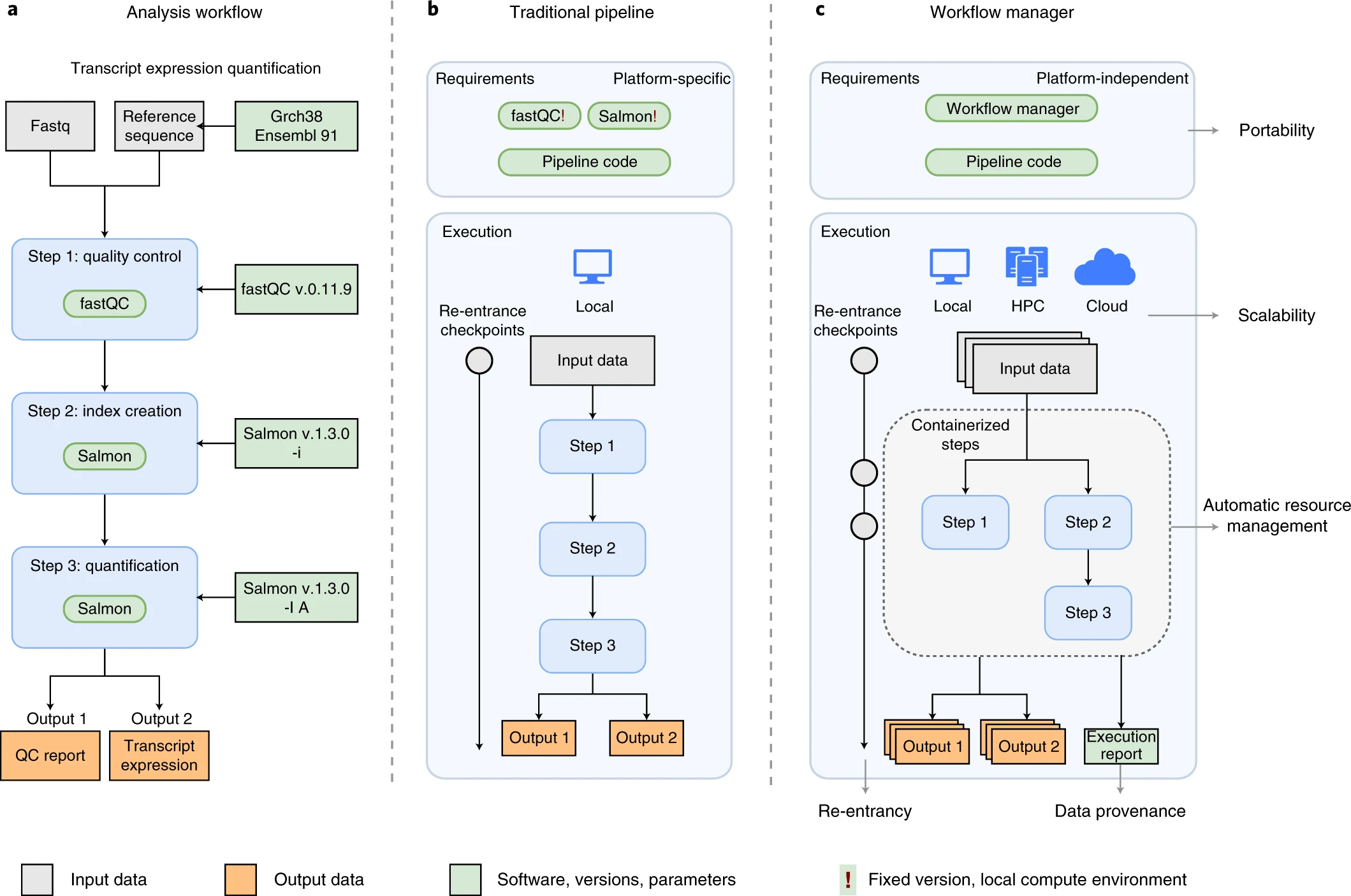

Workflow Management Systems (WfMS) such as Snakemake, Galaxy, and Nextflow have been developed specifically to manage computational data-analysis workflows in fields such as bioinformatics, imaging, physics, and chemistry. These systems contain multiple features that simplify the development, monitoring, execution and sharing of pipelines, such as:

- Run time management

- Software management

- Portability & Interoperability

- Reproducibility

- Re-entrancy

An example of differences between running a specific analysis workflow using a traditional pipeline or a WfMS-based pipeline. Source: Wratten, L., Wilm, A. & Göke, J. Reproducible, scalable, and shareable analysis pipelines with bioinformatics workflow managers. Nat Methods 18, 1161–1168 (2021). https://doi.org/10.1038/s41592-021-01254-9

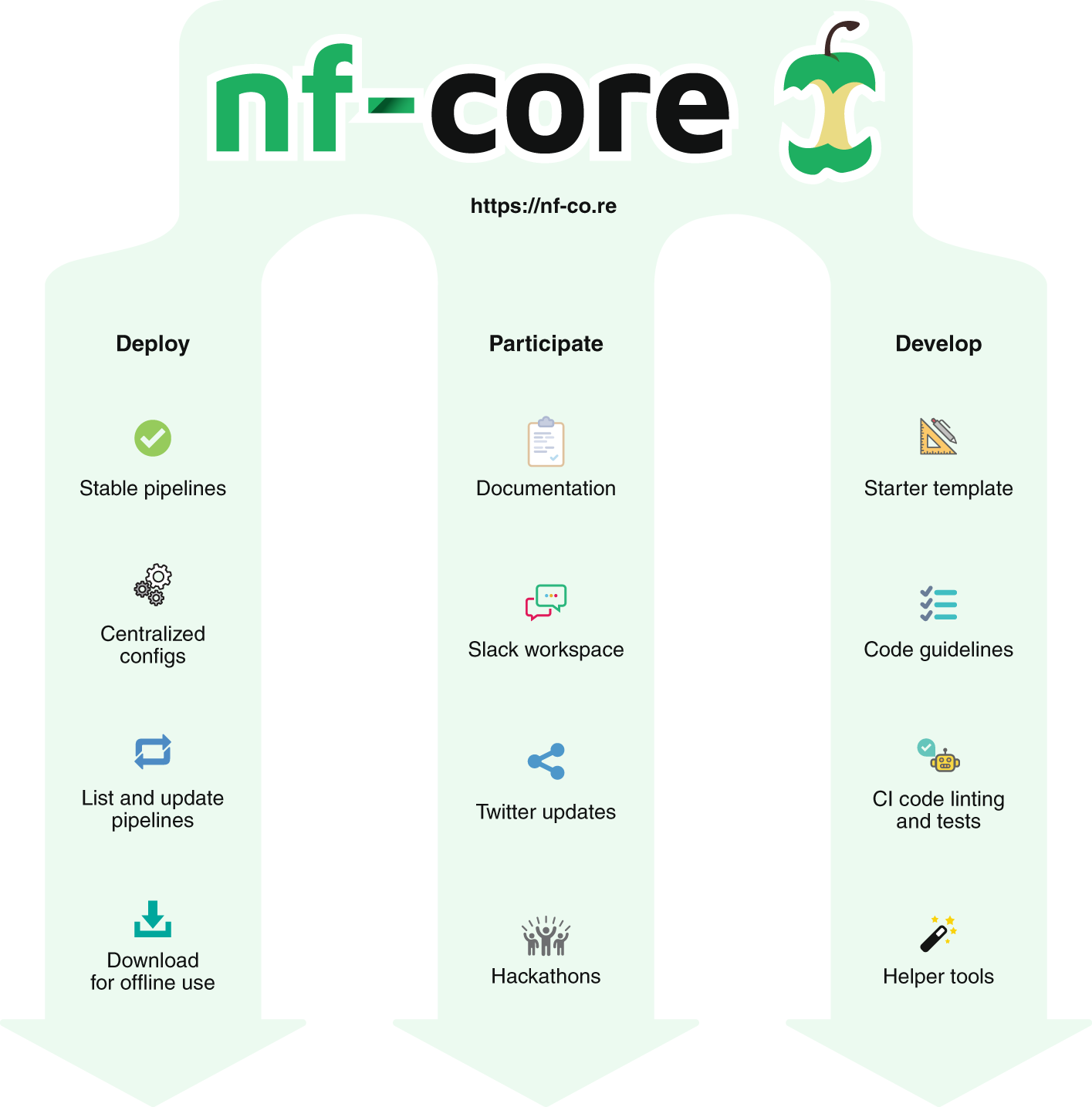

Nextflow core features

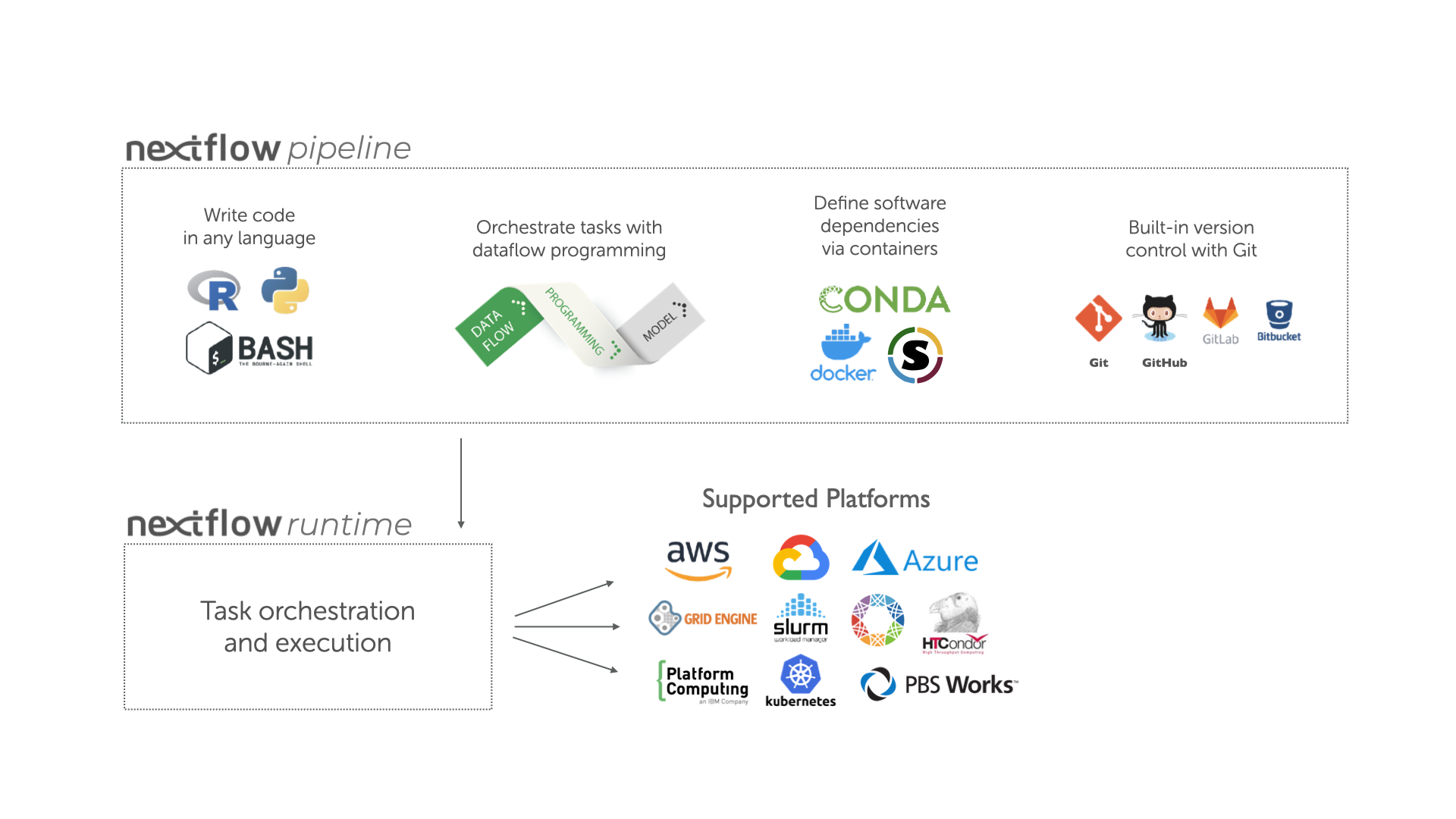

Overview of Nextflow core features.

Fast prototyping: A simple syntax for writing pipelines that enables you to reuse existing scripts and tools for fast prototyping.

Reproducibility: Nextflow supports several container technologies, such as Docker and Singularity, as well as the package manager Conda. This, along with the integration of the GitHub code sharing platform, allows you to write self-contained pipelines, manage versions and to reproduce any previous result when re-run, including on different computing platforms.

Portability & interoperability: Nextflow’s syntax separates the functional logic (the steps of the workflow) from the execution settings (how the workflow is executed). This allows the pipeline to be run on multiple platforms, e.g. local compute vs. a university compute cluster or a cloud service like AWS, without changing the steps of the workflow.

Simple parallelism: Nextflow is based on the dataflow programming model which greatly simplifies the splitting of tasks that can be run at the same time (parallelisation).

Continuous checkpoints & re-entrancy: All the intermediate results produced during the pipeline execution are automatically tracked. This allows you to resume its execution from the last successfully executed step, no matter what the reason was for it stopping.

Processes, channels, and workflows

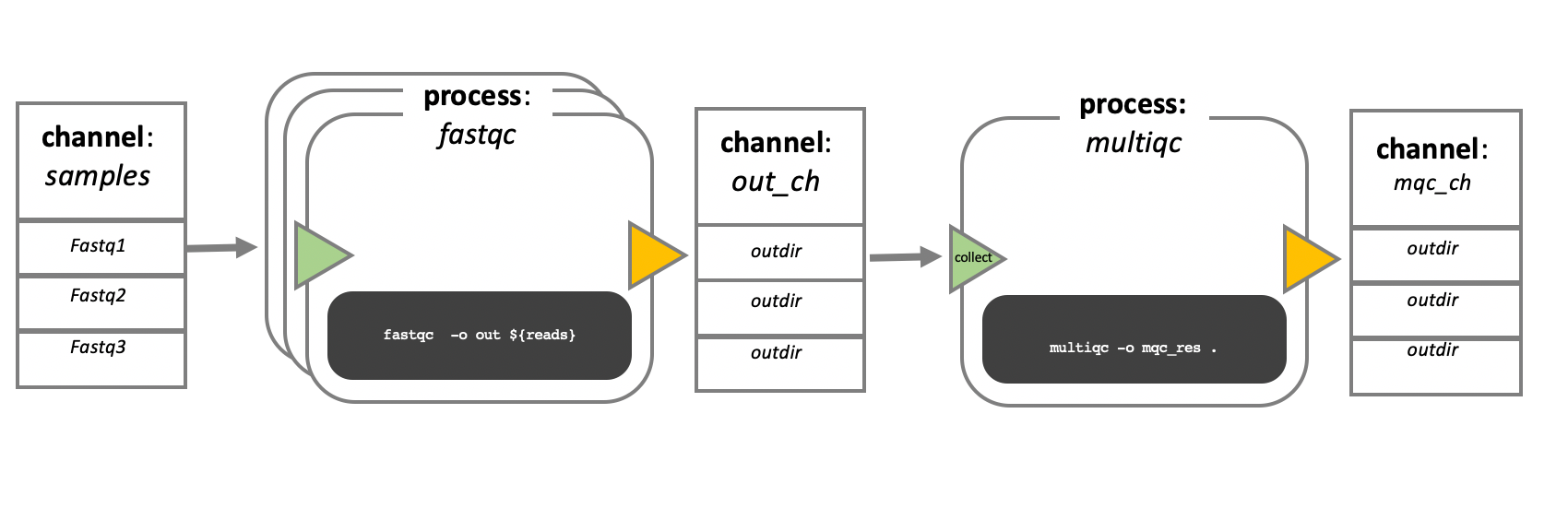

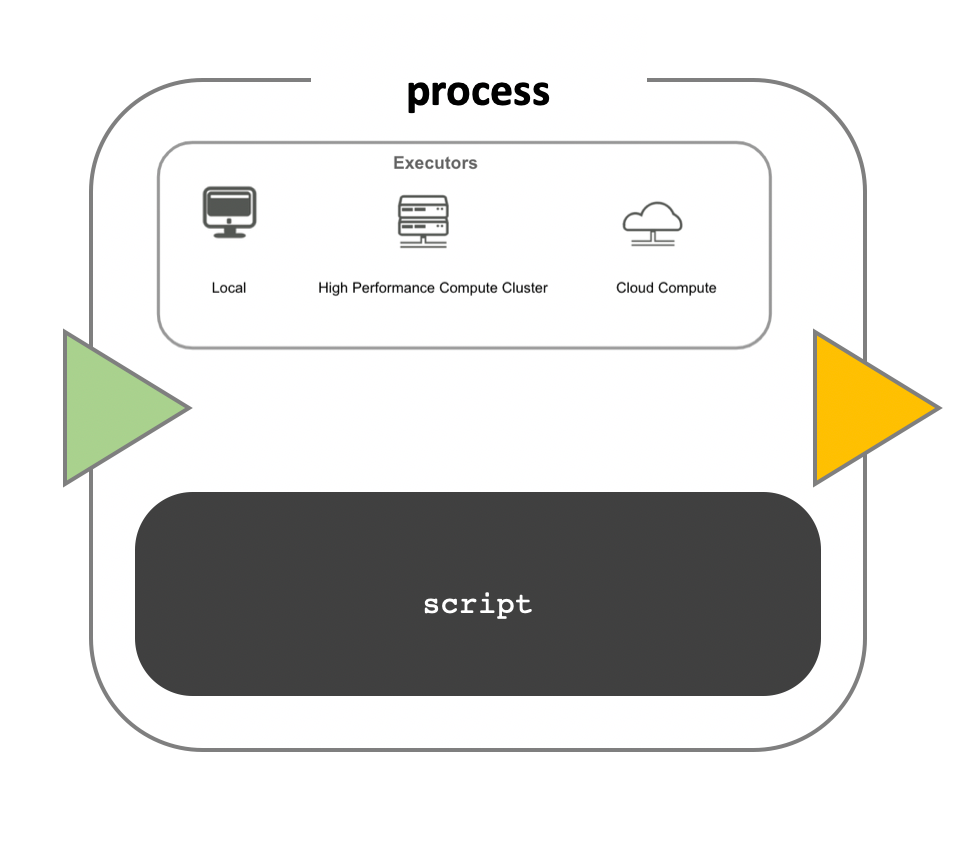

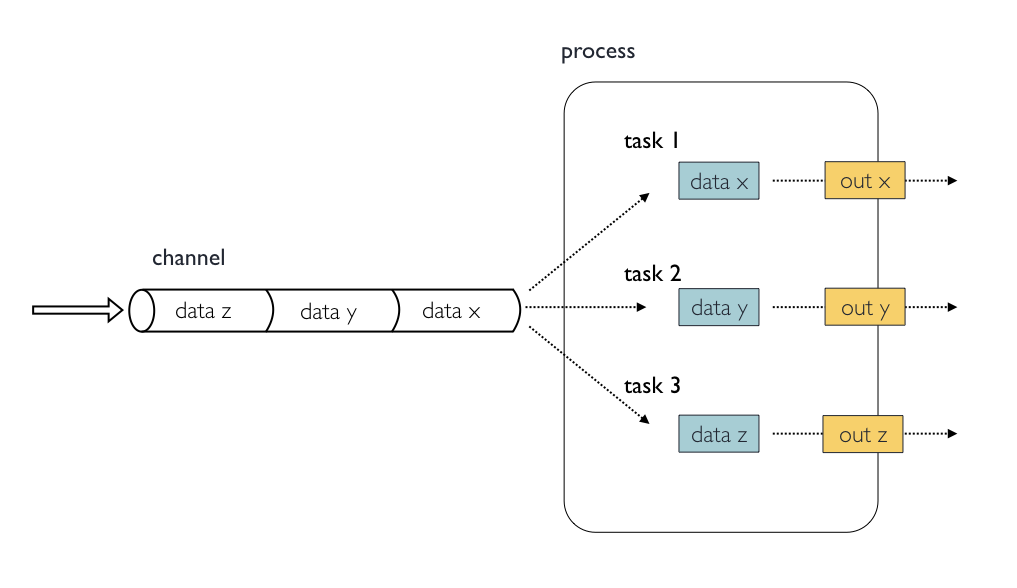

Nextflow workflows have three main parts: processes, channels, and workflows.

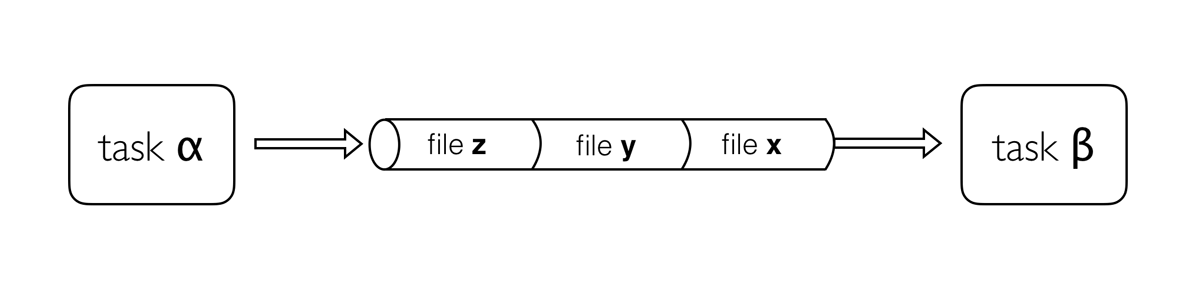

Processes describe a task to be run. A process script can be written in any scripting language that can be executed by the Linux platform (Bash, Perl, Ruby, Python, R, etc.). Processes spawn a task for each complete input set. Each task is executed independently and cannot interact with other tasks. The only way data can be passed between process tasks is via asynchronous queues, called channels.

Processes define inputs and outputs for a task. Channels are then used to manipulate the flow of data from one process to the next.

The interaction between processes, and ultimately the pipeline execution flow itself, is then explicitly defined in a workflow section.

In the following example we have a channel containing three elements, e.g., three data files. We have a process that takes the channel as input. Since the channel has three elements, three independent instances (tasks) of that process are run in parallel. Each task generates an output, which is passed to another channel and used as input for the next process.

Nextflow process flow diagram.

Workflow execution

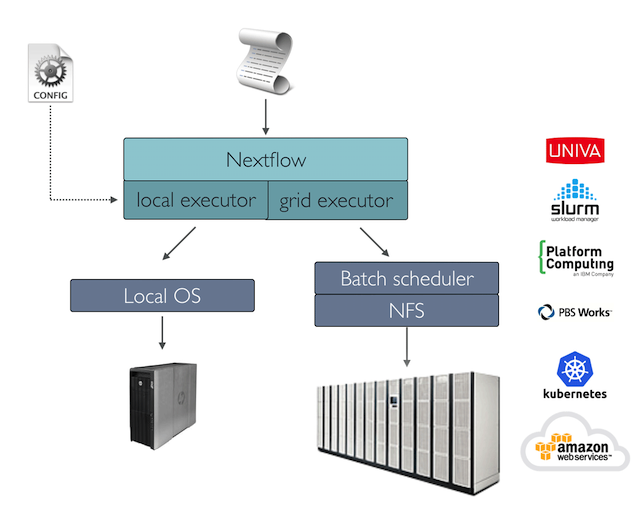

While a process defines what command or script has to be

executed, the executor determines how that script is

actually run in the target system.

If not otherwise specified, processes are executed on the local computer. The local executor is very useful for pipeline development, testing, and small-scale workflows, but for large-scale computational pipelines, a High Performance Cluster (HPC) or Cloud platform is often required.

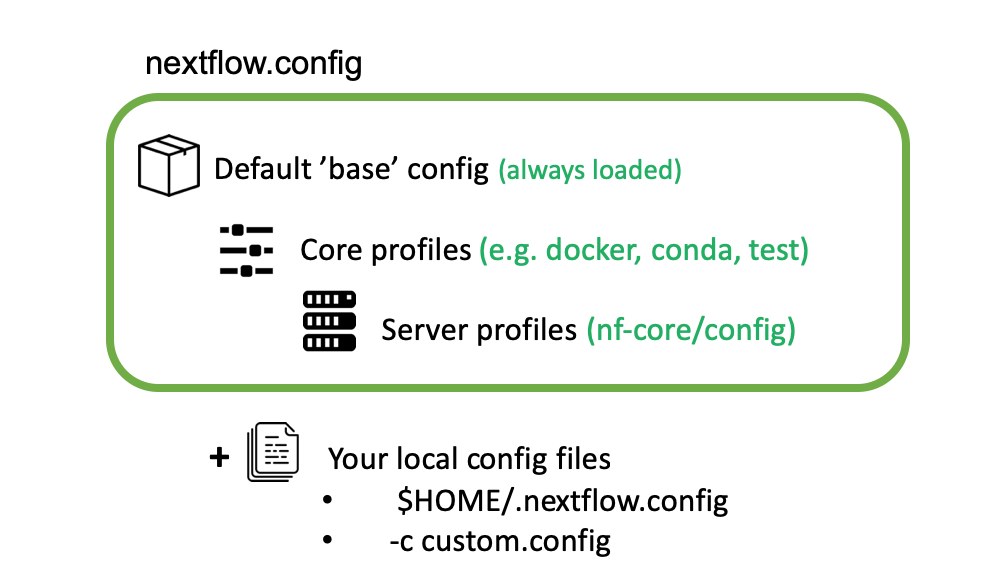

Nextflow Executors

Nextflow provides a separation between the pipeline’s functional logic and the underlying execution platform. This makes it possible to write a pipeline once, and then run it on your computer, compute cluster, or the cloud, without modifying the workflow, by defining the target execution platform in a configuration file.

Nextflow provides out-of-the-box support for major batch schedulers and cloud platforms such as Sun Grid Engine, SLURM job scheduler, AWS Batch service and Kubernetes; a full list can be found here.

Your first script

We are now going to look at a sample Nextflow script that counts the

number of lines in a file. Create the file word_count.nf in

the current directory using your favourite text editor and copy-paste

the following code:

The nextflow scripts for each lesson episode are available in the

scripts directory created during the course setup. You

should copy the script into the current directory

For example, to copy the script for this lesson episode, run the following command:

GROOVY

#!/usr/bin/env nextflow

/*

========================================================================================

Workflow parameters are written as params.<parameter>

and can be initialised using the `=` operator.

========================================================================================

*/

params.input = "data/yeast/reads/ref1_1.fq.gz"

/*

========================================================================================

Input data is received through channels

========================================================================================

*/

input_ch = Channel.fromPath(params.input)

/*

========================================================================================

Main Workflow

========================================================================================

*/

workflow {

// The script to execute is called by it's process name, and input is provided between brackets.

NUM_LINES(input_ch)

/* Process output is accessed using the `out` channel.

The channel operator view() is used to print process output to the terminal. */

NUM_LINES.out.view()

}

/*

========================================================================================

A Nextflow process block. Process names are written, by convention, in uppercase.

This convention is used to enhance workflow readability.

========================================================================================

*/

process NUM_LINES {

input:

path read

output:

stdout

script:

"""

# Print file name

printf '${read}\\t'

# Unzip file and count number of lines

gunzip -c ${read} | wc -l

"""

}This is a Nextflow script, which contains the following:

- An optional interpreter directive (“Shebang”) line, specifying the location of the Nextflow interpreter.

- A multi-line Nextflow comment, written using C style block comments, there are more comments later in the file.

- A pipeline parameter

params.inputwhich is given a default value, of the relative path to the location of a compressed fastq file, as a string. - A Nextflow channel

input_chused to read in data to the workflow. - An unnamed

workflowexecution block, which is the default workflow to run. - A call to the process

NUM_LINES. - An operation on the process output, using the channel operator

.view(). - A Nextflow process block named

NUM_LINES, which defines what the process does. - An

inputdefinition block that assigns theinputto the variableread, and declares that it should be interpreted as a file path. - An

outputdefinition block that uses the Linux/Unix standard output streamstdoutfrom the script block. - A script block that contains the bash commands

printf '${read}'andgunzip -c ${read} | wc -l.

Running Nextflow scripts

To run a Nextflow script use the command

nextflow run <script_name>.

You should see output similar to the text shown below:

OUTPUT

N E X T F L O W ~ version 21.04.3

Launching `word_count.nf` [fervent_babbage] - revision: c54a707593

executor > local (1)

[21/b259be] process > NUM_LINES (1) [100%] 1 of 1 ✔

ref1_1.fq.gz 58708- The first line shows the Nextflow version number.

- The second line shows the run name

fervent_babbage(adjective and scientist name) and revision idc54a707593. - The third line tells you the process has been executed locally

(

executor > local). - The next line shows the process id

21/b259be, process name, number of cpus, percentage task completion, and how many instances of the process have been run. - The final line is the output of the

.view()operator.

Quick recap

- A workflow is a sequence of tasks that process a set of data, and a workflow management system (WfMS) is a computational platform that provides an infrastructure for the set-up, execution and monitoring of workflows.

- Nextflow scripts comprise of channels for controlling inputs and outputs, and processes for defining workflow tasks.

- You run a Nextflow script using the

nextflow runcommand.

Key Points

- A workflow is a sequence of tasks that process a set of data.

- A workflow management system (WfMS) is a computational platform that provides an infrastructure for the set-up, execution and monitoring of workflows.

- Nextflow is a workflow management system that comprises both a runtime environment and a domain specific language (DSL).

- Nextflow scripts comprise of channels for controlling inputs and outputs, and processes for defining workflow tasks.

- You run a Nextflow script using the

nextflow runcommand.

Content from Workflow parameterisation

Last updated on 2024-12-04 | Edit this page

Estimated time: 25 minutes

Overview

Questions

- How can I change the data a workflow uses?

- How can I parameterise a workflow?

- How can I add my parameters to a file?

Objectives

- Use pipeline parameters to change the input to a workflow.

- Add a pipeline parameters to a Nextflow script.

- Understand how to create and use a parameter file.

In the first episode we ran the Nextflow script,

word_count.nf, from the command line and it counted the

number of lines in the file data/yeast/reads/ref1_1.fq.gz.

To change the input to script we can make use of pipeline

parameters.

Pipeline parameters

The Nextflow word_count.nf script defines a pipeline

parameter params.input. Pipeline parameters enable you to

change the input to the workflow at runtime, via the command line or a

configuration file, so they are not hard-coded into the script.

Pipeline parameters are declared in the workflow by prepending the

prefix params, separated by the dot character, to a

variable name e.g., params.input.

Their value can be specified on the command line by prefixing the

parameter name with a double dash character, e.g.,

--input.

In the script word_count.nf the pipeline parameter

params.input was specified with a value of

"data/yeast/reads/ref1_1.fq.gz".

To process a different file,

e.g. data/yeast/reads/ref2_2.fq.gz, in the

word_count.nf script we would run:

OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `word_count.nf` [gigantic_woese] - revision: 8acb5cb9b0

executor > local (1)

[26/3cf986] process > NUM_LINES (1) [100%] 1 of 1 ✔

ref2_2.fq.gz 81720We can also use wild cards to specify multiple input files (This will

be covered in the channels episode). In the example below we use the

* to match any sequence of characters between

ref2_ and .fq.gz. Note: If

you use wild card characters on the command line you must enclose the

value in quotes.

This runs the process NUM_LINES twice, once for each file it matches.

OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `word_count.nf` [tender_lumiere] - revision: 8acb5cb9b0

executor > local (2)

[cc/b6f793] process > NUM_LINES (1) [100%] 2 of 2 ✔

ref2_2.fq.gz 81720

ref2_1.fq.gz 81720Change a pipeline’s input using a parameter

Re-run the Nextflow script word_count.nf by changing the

pipeline input to all files in the directory

data/yeast/reads/ that begin with ref and end

with .fq.gz:

The string specified on the command line will override the default value of the parameter in the script. The output will look like this:

OUTPUT

N E X T F L O W ~ version 20.10.0

Launching `word_count.nf` [soggy_miescher] - revision: c54a707593

executor > local (6)

[d3/9ca185] process > NUM_LINES (2) [100%] 6 of 6 ✔

ref3_2.fq.gz 52592

ref2_2.fq.gz 81720

ref1_1.fq.gz 58708

ref1_2.fq.gz 58708

ref3_1.fq.gz 52592

ref2_1.fq.gz 81720Adding a parameter to a script

To add a pipeline parameter to a script prepend the prefix

params, separated by a dot character ., to a

variable name e.g., params.input.

Let’s make a copy of the word_count.nf script as

wc-params.nf and add a new input parameter.

To add a parameter sleep with the default value

2 to wc-params.nf we add the line:

Note: You should always add a sensible default value

to the pipeline parameter. We can use this parameter to add another step

to our NUM_LINES process.

This step, sleep ${params.sleep}, will add a delay for

the amount of time specified in the params.sleep variable,

by default 2 seconds. To access the value inside the script block we use

{variable_name} syntax

e.g. ${params.sleep}.

We can now change the sleep parameter from the command line, For Example:

Add a pipeline parameter

If you haven’t already make a copy of the word_count.nf

as wc-params.nf.

Add the param sleep with a default value of 2 below the

params.input line. Add the line

sleep ${params.sleep} in the process NUM_LINES

above the line printf ${read}.

Run the new script wc-params.nf changing the sleep input

time.

What input file would it run and why?

How would you get it to process all .fq.gz files in the

data/yeast/reads directory as well as changing the sleep

input to 1 second?

This would use 1 as a value of sleep parameter instead

of default value (which is 2) and run the pipeline. The input file would

be data/yeast/reads/ref1_1.fq.gz as this is the default. To

run all input files we could add the param

--input 'data/yeast/reads/*.fq.gz'

Parameter File

If we have many parameters to pass to a script it is best to create a

parameters file. Parameters can be stored in JSON format. JSON is a data

serialization language, that is a way of storing data objects and

structures, such as the params object in a file.

The -params-file option is used to pass the parameters

file to the script.

For example the file wc-params.json contains the

parameters sleep and input in JSON format.

Create a file called wc-params.json with the above

contents.

To run the wc-params.nf script using these parameters we

add the option -params-file and pass the file

wc-params.json:

OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `wc-params.nf` [nostalgic_northcutt] - revision: 2f86c9ac7e

executor > local (2)

[b4/747eaa] process > NUM_LINES (1) [100%] 2 of 2 ✔

etoh60_1_2.fq.gz 87348

etoh60_1_1.fq.gz 87348Create and use a Parameter file.

Create a parameter file params.json for the Nextflow

file wc-params.nf, and run the Nextflow script using the

created parameter file, specifying:

- sleep as 10

- input as

data/yeast/reads/ref3_1.fq.gz

OUTPUT

N E X T F L O W

version 21.04.0 Launching `wc-params.nf` [small_wiles] - revision:

f5ef7b7a01 executor \> local (1) [f3/4fa480] process \> NUM_LINES

(1) [100%] 1 of 1 ✔ ref3_1.fq.gz 52592 Key Points

- Pipeline parameters are specified by prepending the prefix

paramsto a variable name, separated by dot character. - To specify a pipeline parameter on the command line for a Nextflow

run use

--variable_namesyntax. - You can add parameters to a JSON formatted file and pass them to the

script using option

-params-file.

Content from Channels

Last updated on 2024-12-04 | Edit this page

Estimated time: 40 minutes

Overview

Questions

- How do I move data around in Nextflow?

- How do I handle different types of input, e.g. files and parameters?

- How do I create a Nextflow channel?

- How can I use pattern matching to select input files?

- How do I change the way inputs are handled?

Objectives

- Understand how Nextflow manages data using channels.

- Understand the different types of Nextflow channels.

- Create a value and queue channel using channel factory methods.

- Select files as input based on a glob pattern.

- Edit channel factory arguments to alter how data is read in.

Channels

Earlier we learnt that channels are the way in which Nextflow sends

data around a workflow. Channels connect processes via their inputs and

outputs. Channels can store multiple items, such as files (e.g., fastq

files) or values. The number of items a channel stores determines how

many times a process will run using that channel as input.

Note: When the process runs using one item from the input

channel, we will call that run a task.

Why use Channels?

Channels are how Nextflow handles file management, allowing complex tasks to be split up, run in parallel, and reduces ‘admin’ required to get the right inputs to the right parts of the pipeline.

Channels are asynchronous, which means that outputs from a set of processes will not necessarily be produced in the same order as the corresponding inputs went in. However, the first element into a channel queue is the first out of the queue (First in - First out). This allows processes to run as soon as they receive input from a channel. Channels only send data in one direction, from a producer (a process/operator), to a consumer (another process/operator).

Channel types

Nextflow distinguishes between two different kinds of channels: queue channels and value channels.

Queue channel

Queue channels are a type of channel in which data is consumed (used up) to make input for a process/operator. Queue channels can be created in two ways:

- As the outputs of a process.

- Explicitly using channel factory methods such as Channel.of or Channel.fromPath.

Value channels

The second type of Nextflow channel is a value channel.

A value channel is bound to a single

value. A value channel can be used an unlimited number times since its

content is not consumed. This is also useful for processes that need to

reuse input from a channel, for example, a reference genome sequence

file that is required by multiple steps within a process, or by more

than one process.

Queue vs Value Channel.

What type of channel would you use to store the following?

- Multiple values.

- A list with one or more values.

- A single value.

- A queue channels is used to store multiple values.

- A value channel is used to store a single value, this can be a list with multiple values.

- A value channel is used to store a single value.

Creating Channels using Channel factories

Channel factories are used to explicitly create channels. In

programming, factory methods (functions) are a programming design

pattern used to create different types of objects (in this case,

different types of channels). They are implemented for things that

represent more generalised concepts, such as a Channel.

Channel factories are called using the

Channel.<method> syntax, and return a specific

instance of a Channel.

The value Channel factory

The value factory method is used to create a value

channel. Values are put inside parentheses () to assign

them to a channel.

For example:

GROOVY

ch1 = Channel.value( 'GRCh38' )

ch2 = Channel.value( ['chr1', 'chr2', 'chr3', 'chr4', 'chr5'] )

ch3 = Channel.value( ['chr1' : 248956422, 'chr2' : 242193529, 'chr3' : 198295559] )- Creates a value channel and binds a string to it.

- Creates a value channel and binds a list object to it that will be emitted as a single item.

- Creates a value channel and binds a map object to it that will be emitted as a single item.

The value method can only take 1 argument, however, this can be a single list or map containing several elements.

Reminder:

- A List

object can be defined by placing the values in square brackets

[]separated by a comma. - A Map

object is similar, but with

key:value pairsseparated by commas.

To view the contents of a value channel, use the view

operator. We will learn more about channel operators in a later

episode.

GROOVY

ch1 = Channel.value( 'GRCh38' )

ch2 = Channel.value( ['chr1', 'chr2', 'chr3', 'chr4', 'chr5'] )

ch3 = Channel.value( ['chr1' : 248956422, 'chr2' : 242193529, 'chr3' : 198295559] )

ch1.view()

ch2.view()

ch3.view()Each item in the channel is printed on a separate line.

OUTPUT

GRCh38

[chr1, chr2, chr3, chr4, chr5]

[chr1:248956422, chr2:242193529, chr3:198295559]Queue channel factory

Queue (consumable) channels can be created using the following channel factory methods.

Channel.ofChannel.fromListChannel.fromPathChannel.fromFilePairsChannel.fromSRA

The of Channel factory

When you want to create a channel containing multiple values you can

use the channel factory Channel.of. Channel.of

allows the creation of a queue channel with the values

specified as arguments, separated by a ,.

OUTPUT

chr1

chr3

chr5

chr7The first line in this example creates a variable

chromosome_ch. chromosome_ch is a queue

channel containing the four values specified as arguments in the

of method. The view operator will print one

line per item in a list. Therefore the view operator on the

second line will print four lines, one for each element in the

channel:

You can specify a range of numbers as a single argument using the

Groovy range operator ... This creates each value in the

range (including the start and end values) as a value in the channel.

The Groovy range operator can also produce ranges of dates, letters, or

time. More information on the range operator can be found here.

Arguments passed to the of method can be of varying

types e.g., combinations of numbers, strings, or objects. In the above

examples we have examples of both string and number data types.

Channel.from

You may see the method Channel.from in older nextflow

scripts. This performs a similar function but is now deprecated (no

longer used), and so Channel.of should be used instead.

Create a value and Queue and view Channel contents

- Create a Nextflow script file called

channel.nf. - Create a Value channel

ch_vlcontaining the String'GRCh38'. - Create a Queue channel

ch_qucontaining the values 1 to 4. - Use

.view()operator on the channel objects to view the contents of the channels. - Run the code using

The fromList Channel factory

You can use the Channel.fromList method to create a

queue channel from a list object.

GROOVY

aligner_list = ['salmon', 'kallisto']

aligner_ch = Channel.fromList(aligner_list)

aligner_ch.view()This would produce two lines.

OUTPUT

salmon

kallistoChannel.fromList vs Channel.of

In the above example, the channel has two elements. If you has used

the Channel.of(aligner_list) it would have contained only 1 element

[salmon, kallisto] and any operator or process using the

channel would run once.

Creating channels from a list

Write a Nextflow script that creates both a queue and

value channel for the list

Then print the contents of the channels using the view

operator. How many lines does the queue and value channel print?

Hint: Use the fromList() and

value() Channel factory methods.

GROOVY

ids = ['ERR908507', 'ERR908506', 'ERR908505']

queue_ch = Channel.fromList(ids)

value_ch = Channel.value(ids)

queue_ch.view()

value_ch.view()OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `channel_fromList.nf` [wise_hodgkin] - revision: 22d76be151

ERR908507

ERR908506

ERR908505

[ERR908507, ERR908506, ERR908505]The queue channel queue_ch will print three lines.

The value channel value_ch will print one line.

The fromPath Channel factory

The previous channel factory methods dealt with sending general

values in a channel. A special channel factory method

fromPath is used when wanting to pass files.

The fromPath factory method creates a queue

channel containing one or more files matching a file path.

The file path (written as a quoted string) can be the location of a single file or a “glob pattern” that matches multiple files or directories.

The file path can be a relative path (path to the file from the

current directory), or an absolute path (path to the file from the

system root directory - starts with /).

The script below creates a queue channel with a single file as its content.

OUTPUT

data/yeast/reads/ref1_2.fq.gzYou can also use glob syntax to specify pattern-matching behaviour for files. A glob pattern is specified as a string and is matched against directory or file names.

- An asterisk,

*, matches any number of characters (including none). - Two asterisks,

**, works like * but will also search sub directories. This syntax is generally used for matching complete paths. - Braces

{}specify a collection of subpatterns. For example:{bam,bai}matches “bam” or “bai”

For example the script below uses the *.fq.gz pattern to

create a queue channel that contains as many items as there are files

with .fq.gz extension in the data/yeast/reads

folder.

OUTPUT

data/yeast/reads/ref1_2.fq.gz

data/yeast/reads/etoh60_3_2.fq.gz

data/yeast/reads/temp33_1_2.fq.gz

data/yeast/reads/temp33_2_1.fq.gz

data/yeast/reads/ref2_1.fq.gz

data/yeast/reads/temp33_3_1.fq.gz

[..truncated..]Note The pattern must contain at least a star wildcard character.

You can change the behaviour of Channel.fromPath method

by changing its options. A list of .fromPath options is

shown below.

Available fromPath options:

| Name | Description |

|---|---|

| glob | When true, the characters *, ?,

[] and {} are interpreted as glob wildcards,

otherwise they are treated as literal characters (default: true) |

| type | The type of file paths matched by the string, either

file, dir or any (default:

file) |

| hidden | When true, hidden files are included in the resulting paths (default: false) |

| maxDepth | Maximum number of directory levels to visit (default: no limit) |

| followLinks | When true, symbolic links are followed during directory tree traversal, otherwise they are managed as files (default: true) |

| relative | When true returned paths are relative to the top-most common directory (default: false) |

| checkIfExists | When true throws an exception if the specified path does not exist in the file system (default: false) |

We can change the default options for the fromPath

method to give an error if the file doesn’t exist using the

checkIfExists parameter. In Nextflow, method parameters are

separated by a , and parameter values specified with a

colon :.

If we execute a Nextflow script with the contents below, it will run and not produce an output, or an error message that the file does not exist. This is likely not what we want.

OUTPUT

N E X T F L O W ~ version 20.10.0

Launching `channels.nf` [scruffy_swartz] DSL2 - revision: 2c8f18ab48Add the argument checkIfExists with the value

true.

GROOVY

read_ch = Channel.fromPath( 'data/chicken/reads/*.fq.gz', checkIfExists: true )

read_ch.view()This will give an error as there is no data/chicken directory.

OUTPUT

N E X T F L O W ~ version 20.10.0

Launching `channels.nf` [intergalactic_mcclintock] - revision: d2c138894b

No files match pattern `*.fq.gz` at path: data/chicken/reads/Using Channel.fromPath

- Create a Nextflow script

channel_fromPath.nf - Use the

Channel.fromPathmethod to create a channel containing all files in thedata/yeast/directory, including the subdirectories. - Add the parameter to include any hidden files.

- Then print all file names using the

viewoperator.

Hint: You need two asterisks, i.e. **,

to search subdirectories.

OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `channel_fromPath.nf` [reverent_mclean] - revision: cf02269bcb

data/yeast/samples.csv

data/yeast/reads/etoh60_3_2.fq.gz

data/yeast/reads/temp33_1_2.fq.gz

data/yeast/reads/temp33_2_1.fq.gz

[..truncated..]The fromFilePairs Channel factory

We have seen how to process files individually using

fromPath. In Bioinformatics we often want to process files

in pairs or larger groups, such as read pairs in sequencing.

For example is the data/yeast/reads directory we have

nine groups of read pairs.

| Sample group | read1 | read2 |

|---|---|---|

| ref1 | data/yeast/reads/ref1_1.fq.gz | data/yeast/reads/ref1_2.fq.gz |

| ref2 | data/yeast/reads/ref2_1.fq.gz | data/yeast/reads/ref2_2.fq.gz |

| ref3 | data/yeast/reads/ref3_1.fq.gz | data/yeast/reads/ref3_2.fq.gz |

| temp33_1 | data/yeast/reads/temp33_1_1.fq.gz | data/yeast/reads/temp33_1_2.fq.gz |

| temp33_2 | data/yeast/reads/temp33_2_1.fq.gz | data/yeast/reads/temp33_2_2.fq.gz |

| temp33_3 | data/yeast/reads/temp33_3_1.fq.gz | data/yeast/reads/temp33_3_2.fq.gz |

| etoh60_1 | data/yeast/reads/etoh60_1_1.fq.gz | data/yeast/reads/etoh60_1_2.fq.gz |

| etoh60_2 | data/yeast/reads/etoh60_2_1.fq.gz | data/yeast/reads/etoh60_2_2.fq.gz |

| etoh60_3 | data/yeast/reads/etoh60_3_1.fq.gz | data/yeast/reads/etoh60_3_2.fq.gz |

Nextflow provides a convenient Channel factory method for this common

bioinformatics use case. The fromFilePairs method creates a

queue channel containing a tuple for every set of files

matching a specific glob pattern (e.g.,

/path/to/*_{1,2}.fq.gz).

A tuple is a grouping of data, represented as a Groovy

List.

- The first element of the tuple emitted from

fromFilePairsis a string based on the shared part of the filenames (i.e., the*part of the glob pattern). - The second element is the list of files matching the remaining part

of the glob pattern (i.e., the

<string>_{1,2}.fq.gzpattern). This will include any files ending_1.fq.gzor_2.fq.gz.

OUTPUT

[etoh60_3, [data/yeast/reads/etoh60_3_1.fq.gz, data/yeast/reads/etoh60_3_2.fq.gz]]

[temp33_1, [data/yeast/reads/temp33_1_1.fq.gz, data/yeast/reads/temp33_1_2.fq.gz]]

[ref1, [data/yeast/reads/ref1_1.fq.gz, data/yeast/reads/ref1_2.fq.gz]]

[ref2, [data/yeast/reads/ref2_1.fq.gz, data/yeast/reads/ref2_2.fq.gz]]

[temp33_2, [data/yeast/reads/temp33_2_1.fq.gz, data/yeast/reads/temp33_2_2.fq.gz]]

[ref3, [data/yeast/reads/ref3_1.fq.gz, data/yeast/reads/ref3_2.fq.gz]]

[temp33_3, [data/yeast/reads/temp33_3_1.fq.gz, data/yeast/reads/temp33_3_2.fq.gz]]

[etoh60_1, [data/yeast/reads/etoh60_1_1.fq.gz, data/yeast/reads/etoh60_1_2.fq.gz]]

[etoh60_2, [data/yeast/reads/etoh60_2_1.fq.gz, data/yeast/reads/etoh60_2_2.fq.gz]]This will produce a queue channel, read_pair_ch ,

containing nine elements.

Each element is a tuple that has;

- string value (the file prefix matched, e.g

temp33_1) - and a list with the two files e,g.

[data/yeast/reads/temp33_1_1.fq.gz, data/yeast/reads/temp33_1_2.fq.gz].

The asterisk character *, matches any number of

characters (including none), and the {} braces specify a

collection of subpatterns. Therefore the *_{1,2}.fq.gz

pattern matches any file name ending in _1.fq.gz or

_2.fq.gz .

What if you want to capture more than a pair?

If you want to capture more than two files for a pattern you will

need to change the default size argument (the default value

is 2) to the number of expected matching files.

For example in the directory data/yeast/reads there are

six files with the prefix ref. If we want to group (create

a tuple) for all of these files we could write;

GROOVY

read_group_ch = Channel.fromFilePairs('data/yeast/reads/ref{1,2,3}*',size:6)

read_group_ch.view()The code above will create a queue channel containing one element. The element is a tuple of which contains a string value, that is the pattern ref, and a list of six files matching the pattern.

OUTPUT

[ref, [data/yeast/reads/ref1_1.fq.gz, data/yeast/reads/ref1_2.fq.gz, data/yeast/reads/ref2_1.fq.gz, data/yeast/reads/ref2_2.fq.gz, data/yeast/reads/ref3_1.fq.gz, data/yeast/reads/ref3_2.fq.gz]]See more information about the channel factory

fromFilePairs here

More complex patterns

If you need to match more complex patterns you should create a sample sheet specifying the files and create a channel from that. This will be covered in the operator episode.

Create a channel containing groups of files

- Create a Nextflow script file

channel_fromFilePairs.nf. - Use the

fromFilePairsmethod to create a channel containing three tuples. Each tuple will contain the pairs of fastq reads for the three temp33 samples in thedata/yeast/readsdirectory

OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `channels.nf` [stupefied_lumiere] - revision: a3741edde2

[temp33_1, [data/yeast/reads/temp33_1_1.fq.gz, data/yeast/reads/temp33_1_2.fq.gz]]

[temp33_3, [data/yeast/reads/temp33_3_1.fq.gz, data/yeast/reads/temp33_3_2.fq.gz]]

[temp33_2, [data/yeast/reads/temp33_2_1.fq.gz, data/yeast/reads/temp33_2_2.fq.gz]]Key Points

- Channels must be used to import data into Nextflow.

- Nextflow has two different kinds of channels: queue channels and value channels.

- Data in value channels can be used multiple times in workflow.

- Data in queue channels are consumed when they are used by a process or an operator.

- Channel factory methods, such as

Channel.of, are used to create channels. - Channel factory methods have optional parameters e.g.,

checkIfExists, that can be used to alter the creation and behaviour of a channel.

Content from Processes

Last updated on 2024-12-04 | Edit this page

Estimated time: 45 minutes

Overview

Questions

- How do I run tasks/processes in Nextflow?

- How do I get data, files and values, into a processes?

Objectives

- Understand how Nextflow uses processes to execute tasks.

- Create a Nextflow process.

- Define inputs to a process.

Processes

We now know how to create and use Channels to send data around a workflow. We will now see how to run tasks within a workflow using processes.

A process is the way Nextflow executes commands you

would run on the command line or custom scripts.

A process can be thought of as a particular step in a workflow, e.g. an alignment step in RNA-seq analysis. Processes are independent of each other (don’t require any another process to execute) and can not communicate/write to each other. Data is passed between processes via input and output Channels.

For example, below is the command you would run to count the number of sequence records in a FASTA format file such as the yeast transcriptome:

FASTA format

FASTA format is a text-based format for representing either nucleotide sequences or peptide sequences. A sequence in FASTA format begins with a single-line description, followed by lines of sequence data. The description line is distinguished from the sequence data by a greater-than (“>”) symbol in the first column.

BASH

>YBR024W_mRNA cdna chromosome:R64-1-1:II:289445:290350:1 gene:YBR024W gene_biotype:protein_coding transcript_biotype:protein_coding gene_symbol:SCO2 description:Protein anchored to mitochondrial inner membrane; may have a redundant function with Sco1p in delivery of copper to cytochrome c oxidase; interacts with Cox2p; SCO2 has a paralog, SCO1, that arose from the whole genome duplication [Source:SGD;Acc:S000000228]

ATGTTGAATAGTTCAAGAAAATATGCTTGTCGTTCCCTATTCAGACAAGCGAACGTCTCA

ATAAAAGGACTCTTTTATAATGGAGGCGCATATCGAAGAGGGTTTTCAACGGGATGTTGTzgrep -c ‘^>’

The command

zgrep -c '^>' data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz

is used in Unix-like systems for a specific purpose: it counts the

number of sequences in a compressed FASTA file. The tool

zgrep combines the functionalities of ‘grep’ for pattern

searching and ‘gzip’ for handling compressed files. The -c

option modifies this command to count the occurrences of lines matching

the pattern, instead of displaying them. The pattern

'^>' is designed to find lines that start with ‘>’,

which in FASTA files, denotes the beginning of a new sequence. Thus,

this command efficiently counts how many sequences are contained within

the specified compressed FASTA file.

OUTPUT

6612Now we will show how to convert this into a simple Nextflow process.

Process definition

The process definition starts with keyword process,

followed by process name, in this case NUMSEQ, and finally

the process body delimited by curly brackets

{}. The process body must contain a string which represents

the command or, more generally, a script that is executed by it.

GROOVY

process NUMSEQ {

script:

"zgrep -c '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

}This process would run once.

Implicit variables

We use the Nextflow implicit variable ${projectDir} to

specify the directory where the main script is located. This is

important as Nextflow scripts are executed in a separate working

directory. A full list of implicit variables can be found here

To add the process to a workflow add a workflow block,

and call the process like a function. We will learn more about the

workflow block in the workflow episode.

GROOVY

//process_01.nf

process NUMSEQ {

script:

"zgrep -c '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

}

workflow {

//process is called like a function in the workflow block

NUMSEQ()

}We can now run the process:

Note We need to add the Nextflow run option

-process.debug to print the output to the terminal.

OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `process_01.nf` [modest_pike] - revision: 3eaa812b17

executor > local (1)

[cd/eab1fd] process > NUMSEQ [100%] 1 of 1 ✔

6612GROOVY

process COUNT_BASES {

script:

"""

zgrep -v '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz|tr -d '\n'|wc -m

"""

}

workflow {

COUNT_BASES()

}OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `simple_process.nf`` [prickly_gilbert] - revision: 471a79c65c

executor > local (1)

[56/5e6001] process > COUNT_BASES [100%] 1 of 1 ✔

8772368Definition blocks

The previous example was a simple process with no

defined inputs and outputs that ran only once. To control inputs,

outputs and how a command is executed a process may contain five

definition blocks:

- directives - 0, 1, or more: allow the definition of optional settings that affect the execution of the current process e.g. the number of cpus a task uses and the amount of memory allocated.

- inputs - 0, 1, or more: Define the input dependencies, usually channels, which determines the number of times a process is executed.

- outputs - 0, 1, or more: Defines the output channels used by the process to send results/data produced by the process.

- when clause - optional: Allows you to define a condition that must be verified in order to execute the process.

- script block - required: A statement within quotes that defines the commands that are executed by the process to carry out its task.

The syntax is defined as follows:

Script

At minimum a process block must contain a script

block.

The script block is a String “statement” that defines

the command that is executed by the process to carry out its task. These

are normally the commands you would run on a terminal.

A process contains only one script block, and it must be

the last statement when the process contains input and

output declarations.

The script block can be a simple one line string in

quotes e.g.

GROOVY

process NUMSEQ {

script:

"zgrep -c '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

}

workflow {

NUMSEQ()

}Or, for commands that span multiple lines you can encase the command

in triple quotes """.

For example:

GROOVY

//process_multi_line.nf

process NUMSEQ_CHR {

script:

"""

zgrep '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz > ids.txt

zgrep -c '>YA' ids.txt

"""

}

workflow {

NUMSEQ_CHR()

}OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `process_multi_line.nf` [focused_jang] - revision: e32caf0dcb

executor > local (1)

[90/6e38f4] process > NUMSEQ_CHR [100%] 1 of 1 ✔

118The following section on python is meant to be run by the instructor not the learners. It is meant to be a demonstration of the different ways to run a process. This can be skipped for time.

By default the process command is interpreted as a Bash script. However, any other scripting language can be used just simply starting the script with the corresponding Shebang declaration. For example:

GROOVY

//process_python.nf

process PROCESS_READS {

script:

"""

#!/usr/bin/env python

import gzip

reads = 0

bases = 0

with gzip.open('${projectDir}/data/yeast/reads/ref1_1.fq.gz', 'rb') as read:

for id in read:

seq = next(read)

reads += 1

bases += len(seq.strip())

next(read)

next(read)

print("reads", reads)

print("bases", bases)

"""

}

workflow {

PROCESS_READS()

}OUTPUT

N E X T F L O W ~ version 24.04.4

Launching `process_python.nf` [mad_montalcini] DSL2 - revision: ee25d49465

executor > local (1)

[b4/a100c3] PROCESS_READS [100%] 1 of 1 ✔

reads 14677

bases 1482377This allows the use of a different programming languages which may better fit a particular job. However, for large chunks of code it is suggested to save them into separate files and invoke them from the process script.

Associated scripts

Scripts such as the one in the example below,

process_reads.py, can be stored in a bin

folder at the same directory level as the Nextflow workflow script that

invokes them, and given execute permission. Nextflow will automatically

add this folder to the PATH environment variable. To invoke

the script in a Nextflow process, simply use its filename on its own

rather than invoking the interpreter e.g. process_reads.py

instead of python process_reads.py. Note

The script process_reads.py must be executable to run.

PYTHON

#!/usr/bin/env python

# process_reads.py

import gzip

import sys

reads = 0

bases = 0

with gzip.open(sys.argv[1], 'rb') as read:

for id in read:

seq = next(read)

reads += 1

bases += len(seq.strip())

next(read)

next(read)

print("reads", reads)

print("bases", bases)GROOVY

//process_python_script.nf

process PROCESS_READS {

script:

"""

process_reads.py ${projectDir}/data/yeast/reads/ref1_1.fq.gz

"""

}

workflow {

PROCESS_READS()

}OUTPUT

N E X T F L O W ~ version 23.10.1

Launching `pr.nf` [kickass_legentil] DSL2 - revision: 3b9eee1d47

executor > local (1)

[88/759311] process > PROCESS_READS [100%] 1 of 1 ✔

reads 14677

bases 1482377Associated scripts

Scripts such as the one in the example above,

process_reads.py, can be stored in a bin

folder at the same directory level as the Nextflow workflow script that

invokes them, and given execute permission. Nextflow will automatically

add this folder to the PATH environment variable. To invoke

the script in a Nextflow process, simply use its filename on its own

rather than invoking the interpreter e.g. process_reads.py

instead of python process_reads.py.

Script parameters

The command in the script block can be defined

dynamically using Nextflow variables e.g. ${projectDir}. To

reference a variable in the script block you can use the $

in front of the Nextflow variable name, and additionally you can add

{} around the variable name

e.g. ${projectDir}.

Variable substitutions

Similar to bash scripting Nextflow uses the $ character

to introduce variable substitutions. The variable name to be expanded

may be enclosed in braces {variable_name}, which are

optional but serve to protect the variable to be expanded from

characters immediately following it which could be interpreted as part

of the name. It is a good rule of thumb to always use the

{} syntax because it enhances readability and clarity,

ensures correct variable interpretation, and prevents potential syntax

errors in complex expressions.

In the example below the variable chr is set to the

value A at the top of the Nextflow script. The variable is referenced

using the $chr syntax within the multi-line string

statement in the script block. A Nextflow variable can be

used multiple times in the script block.

GROOVY

//process_script.nf

chr = "A"

process CHR_COUNT {

script:

"""

printf "Number of sequences for chromosome ${chr} :"

zgrep -c '>Y'${chr} ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz

"""

}

workflow {

CHR_COUNT()

}In most cases we do not want to hard code parameter values. We saw in

the parameter episode the use of a special Nextflow variable

params that can be used to assign values from the command

line. You would do this by adding a key name to the params variable and

specifying a value, like params.keyname = value

In the example below we define the variable params.chr

with a default value of A in the Nextflow script.

GROOVY

//process_script_params.nf

params.chr = "A"

process CHR_COUNT {

script:

"""

printf 'Number of sequences for chromosome '${params.chr}':'

zgrep -c '^>Y'${params.chr} ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz

"""

}

workflow {

CHR_COUNT()

}Remember, we can change the default value of chr to a

different value such as B, by running the Nextflow script

using the command below. Note: parameters to the

workflow have two hyphens --.

OUTPUT

N E X T F L O W ~ version 24.04.3

Launching `process_script_params.nf` [pedantic_mandelbrot] DSL2 - revision: 538e3c2b38

executor > local (1)

[19/6d96a0] process > CHR_COUNT [100%] 1 of 1 ✔

Number of sequences for chromosome B:456Script parameters

For the Nextflow script below.

GROOVY

//process_exercise_script_params.nf

process COUNT_BASES {

script:

"""

zgrep -v '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz|grep -o A|wc -l

"""

}

workflow {

COUNT_BASES()

}Add a parameter params.base to the script and uses the

variable ${param.base} insides the script. Run the pipeline

using a base value of C using the --base

command line option.

Note: The Nextflow option

-process.debug will print the process’ stdout to the

terminal.

GROOVY

//process_exercise_script_params.nf

params.base='A'

process COUNT_BASES {

script:

"""

zgrep -v '^>' ${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz|grep -o ${params.base}|wc -l

"""

}

workflow {

COUNT_BASES()

}OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `process_script_params.nf ` [nostalgic_jones] - revision: 9feb8de4fe

executor > local (1)

[92/cdc9de] process > COUNT_BASES [100%] 1 of 1 ✔

1677188Bash variables

Nextflow uses the same Bash syntax for variable substitutions,

$variable, in strings. However, Bash variables need to be

escaped using \ character in front of

\$variable name.

In the example below we will set a bash variable NUMIDS

then echo the value of NUMIDS in our script block.

GROOVY

//process_escape_bash.nf

process NUM_IDS {

script:

"""

#set bash variable NUMIDS

NUMIDS=`zgrep -c '^>' $params.transcriptome`

echo 'Number of sequences'

printf "%'d\n" \$NUMIDS

"""

}

params.transcriptome = "${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

workflow {

NUM_IDS()

}Shell

Another alternative is to use a shell block definition

instead of script. When using the shell

statement Bash variables are referenced in the normal way

$my_bash_variable; However, the shell

statement uses a different syntax for Nextflow variable substitutions:

!{nextflow_variable}, which is needed to use both Nextflow

and Bash variables in the same script.

For example in the script below that uses the shell

statement we reference the Nextflow variables as

!{projectDir} , and the Bash variable as

${NUMCHAR} and ${NUMLINES}.

GROOVY

//process_shell.nf

process NUM_IDS {

shell:

//Shell script definition requires the use of single-quote ' delimited strings

'''

#set bash variable NUMIDS

NUMIDS=`zgrep -c '^>' !{params.transcriptome}`

echo 'Number of sequences'

printf $NUMIDS

'''

}

params.transcriptome = "${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

workflow {

NUM_IDS()

}Conditional script execution

Sometimes you want to change how a process is run depending on some

condition. In Nextflow scripts we can use conditional statements such as

the if statement or any other expression evaluating to

boolean value true or false.

If statement

The if statement uses the same syntax common to other

programming languages such Java, C, JavaScript, etc.

GROOVY

if( < boolean expression > ) {

// true branch

}

else if ( < boolean expression > ) {

// true branch

}

else {

// false branch

}For example, the Nextflow script below will use the if

statement to change what the COUNT process counts depending on the

Nextflow variable params.method.

GROOVY

//process_conditional.nf

params.method = 'ids'

params.transcriptome = "$projectDir/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process COUNT {

script:

if( params.method == 'ids' ) {

"""

echo Number of sequences in transciptome

zgrep -c "^>" $params.transcriptome

"""

}

else if( params.method == 'bases' ) {

"""

echo Number of bases in transciptome

zgrep -v "^>" $params.transcriptome|grep -o "."|wc -l

"""

}

else {

"""

echo Unknown method $params.method

"""

}

}

workflow {

COUNT()

}OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `juggle_processes.nf` [cheeky_shirley] - revision: 588f20ae5a

[01/60b08d] process > COUNT [100%] 1 of 1 ✔

Number of sequences in transciptome

6612Inputs

Processes are isolated from each other but can communicate by sending

values and files via Nextflow channels from input and into

output blocks.

The input block defines which channels the process is

expecting to receive input from. The number of elements in input

channels determines the process dependencies and the number of times a

process executes.

You can only define one input block at a time and it must contain one or more input declarations.

The input block follows the syntax shown below:

The input qualifier declares the type of data to be received.

Input qualifiers

-

val: Lets you access the received input value by its name as a variable in the process script. -

env: Lets you use the input value to set an environment variable named as the specified input name. -

path: Lets you handle the received value as a file, staging the file properly in the execution context. -

stdin: Lets you forward the received value to the process stdin special file. -

tuple: Lets you handle a group of input values having one of the above qualifiers. -

each: Lets you execute the process for each entry in the input collection. A complete list of inputs can be found here.

Input values

The val qualifier allows you to receive value data as

input. It can be accessed in the process script by using the specified

input name, as shown in the following example:

GROOVY

//process_input_value.nf

process PRINTCHR {

input:

val chr

script:

"""

echo processing chromosome $chr

"""

}

chr_ch = Channel.of( 'A' .. 'P' )

workflow {

PRINTCHR(chr_ch)

}OUTPUT

N E X T F L O W ~ version 21.04.0

Launching `process_input_value.nf` [wise_kalman] - revision: 7f90e1bfc5

executor > local (24)

[b1/88df3f] process > PRINTCHR (16) [100%] 24 of 24 ✔

processing chromosome C

processing chromosome L

processing chromosome A

..truncated...In the above example the process is executed 16 times; each time a

value is received from the queue channel chr_ch it is used

to run the process.

Channel order

The channel guarantees that items are delivered in the same order as they have been sent, but since the process is executed in a parallel manner, there is no guarantee that they are processed in the same order as they are received.

Input files

When you need to handle files as input, you need the

path qualifier. Using the path qualifier means

that Nextflow will stage it in the process execution directory, and it

can be accessed in the script by using the name specified in the input

declaration.

The input file name can be defined dynamically by defining the input

name as a Nextflow variable and referenced in the script using the

$variable_name syntax.

For example, in the script below, we assign the variable name

read to the input files using the path

qualifier. The file is referenced using the variable substitution syntax

${read} in the script block:

GROOVY

//process_input_file.nf

process NUMLINES {

input:

path read

script:

"""

printf '${read} '

gunzip -c ${read} | wc -l

"""

}

reads_ch = Channel.fromPath( 'data/yeast/reads/ref*.fq.gz' )

workflow {

NUMLINES(reads_ch)

}OUTPUT

[cd/77af6d] process > NUMLINES (1) [100%] 6 of 6 ✔

ref1_1.fq.gz 58708

ref3_2.fq.gz 52592

ref2_2.fq.gz 81720

ref2_1.fq.gz 81720

ref3_1.fq.gz 52592

ref1_2.fq.gz 58708Callout

The input name can also be defined as a user-specified filename

inside quotes. For example, in the script below, the name of the file is

specified as 'sample.fq.gz' in the input definition and can

be referenced by that name in the script block.

GROOVY

//process_input_file_02.nf

process NUMLINES {

input:

path 'sample.fq.gz'

script:

"""

printf 'sample.fq.gz '

gunzip -c sample.fq.gz | wc -l

"""

}

reads_ch = Channel.fromPath( 'data/yeast/reads/ref*.fq.gz' )

workflow {

NUMLINES(reads_ch)

}OUTPUT

[d2/eb0e9d] process > NUMLINES (1) [100%] 6 of 6 ✔

sample.fq.gz 58708

sample.fq.gz 52592

sample.fq.gz 81720

sample.fq.gz 81720

sample.fq.gz 52592

sample.fq.gz 58708File Objects as inputs

When a process declares an input file, the corresponding channel

elements must be file objects, i.e. created with the path helper

function from the file specific channel factories,

e.g. Channel.fromPath or

Channel.fromFilePairs.

Add input channel

For the script process_exercise_input.nf:

- Define a Channel using

fromPathfor the transcriptomeparams.transcriptome.

- Add an input channel that takes the transcriptome channel as a file input.

- Replace

params.transcriptomein thescript:block with the input variable you defined in theinput:definition.

GROOVY

//process_exercise_input.nf

params.chr = "A"

params.transcriptome = "${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process CHR_COUNT {

script:

"""

printf 'Number of sequences for chromosome '${params.chr}':'

zgrep -c '^>Y'${params.chr} ${params.transcriptome}

"""

}

workflow {

CHR_COUNT()

}Then run your script using

GROOVY

params.chr = "A"

params.transcriptome = "${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process CHR_COUNT {

input:

path transcriptome

script:

"""

printf 'Number of sequences for chromosome '${params.chr}':'

zgrep -c '^>Y'${params.chr} ${transcriptome}

"""

}

transcriptome_ch = channel.fromPath(params.transcriptome)

workflow {

CHR_COUNT(transcriptome_ch)

}OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `process_exercise_input.nf` [focused_jang] - revision: e32caf0dcb

executor > local (1)

[00/14ce67] process > CHR_COUNT (1) [100%] 1 of 1 ✔

Number of sequences for chromosome A:118Combining input channels

A key feature of processes is the ability to handle inputs from multiple channels. However, it’s important to understand how the number of items within the multiple channels affect the execution of a process.

Consider the following example:

GROOVY

//process_combine.nf

process COMBINE {

input:

val x

val y

script:

"""

echo $x and $y

"""

}

num_ch = Channel.of(1, 2, 3)

letters_ch = Channel.of('a', 'b', 'c')

workflow {

COMBINE(num_ch, letters_ch)

}Both channels contain three elements, therefore the process is executed three times, each time with a different pair:

OUTPUT

2 and b

1 and a

3 and cWhat is happening is that the process waits until it receives an input value from all the queue channels declared as input.

When this condition is verified, it uses up the input values coming from the respective queue channels, runs the task. This logic repeats until one or more queue channels have no more content. The process then stops.

What happens when not all channels have the same number of elements?

For example:

GROOVY

//process_combine_02.nf

process COMBINE {

input:

val x

val y

script:

"""

echo $x and $y

"""

}

ch_num = Channel.of(1, 2)

ch_letters = Channel.of('a', 'b', 'c', 'd')

workflow {

COMBINE(ch_num, ch_letters)

}In the above example the process is executed only two times, because when a queue channel has no more data to be processed it stops the process execution.

OUTPUT

2 and b

1 and aValue channels and process termination

Note however that value channels,

Channel.value, do not affect the process termination.

To better understand this behaviour compare the previous example with the following one:

GROOVY

//process_combine_03.nf

process COMBINE {

input:

val x

val y

script:

"""

echo $x and $y

"""

}

ch_num = Channel.value(1)

ch_letters = Channel.of('a', 'b', 'c')

workflow {

COMBINE(ch_num, ch_letters)

}In this example the process is run three times.

OUTPUT

1 and b

1 and a

1 and cCombining input channels

GROOVY

// process_exercise_combine_answer.nf

process COMBINE {

input:

path transcriptome

val chr

script:

"""

zgrep -c ">Y${chr}" ${transcriptome}

"""

}

transcriptome_ch = channel.fromPath('data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz', checkIfExists: true)

chr_ch = channel.of("A")

workflow {

COMBINE(transcriptome_ch, chr_ch)

}OUTPUT

N E X T F L O W ~ version 24.04.4

Launching `process_exercise_combine.nf` [fabulous_kare] DSL2 - revision: 1eade0a2e9

executor > local (1)

[e0/b05fe7] COMBINE (1) [100%] 1 of 1 ✔

118Input repeaters

We saw previously that by default the number of times a process runs

is defined by the queue channel with the fewest items. However, the

each qualifier allows you to repeat the execution of a

process for each item in a list or a queue channel, every time new data

is received.

For example if we can fix the previous example by using the input

qualifer each for the letters queue channel:

GROOVY

//process_repeat.nf

process COMBINE {

input:

val x

each y

script:

"""

echo $x and $y

"""

}

ch_num = Channel.of(1, 2)

ch_letters = Channel.of('a', 'b', 'c', 'd')

workflow {

COMBINE(ch_num, ch_letters)

}The process will run eight times.

OUTPUT

2 and d

1 and a

1 and c

2 and b

2 and c

1 and d

1 and b

2 and aInput repeaters

Extend the script process_exercise_repeat.nf by adding

more values to the chr queue channel e.g. A to P and

running the process for each value.

GROOVY

//process_exercise_repeat.nf

process COMBINE {

input:

path transcriptome

val chr

script:

"""

printf "Number of sequences for chromosome $chr: "

zgrep -c "^>Y${chr}" ${transcriptome}

"""

}

transcriptome_ch = channel.fromPath('data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz', checkIfExists: true)

chr_ch = channel.of('A')

workflow {

COMBINE(transcriptome_ch, chr_ch)

}How many times does this process run?

GROOVY

//process_exercise_repeat_answer.nf

process COMBINE {

input:

path transcriptome

each chr

script:

"""

printf "Number of sequences for chromosome $chr: "

zgrep -c "^>Y${chr}" ${transcriptome}

"""

}

transcriptome_ch = channel.fromPath('data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz', checkIfExists: true)

chr_ch = channel.of('A'..'P')

workflow {

COMBINE(transcriptome_ch, chr_ch)

}Then run the script.

This process runs 16 times.

OUTPUT

N E X T F L O W ~ version 24.04.4

Launching `process_exercise_repeat.nf` [ecstatic_turing] DSL2 - revision: 17891a7528

executor > local (16)

[65/389033] COMBINE (13) [ 62%] 10 of 16

executor > local (16)

[6d/f803e5] COMBINE (9) [100%] 16 of 16 ✔

Number of sequences for chromosome J: 398

Number of sequences for chromosome G: 583

Number of sequences for chromosome O: 597

Number of sequences for chromosome N: 435

Number of sequences for chromosome B: 456

Number of sequences for chromosome E: 323

executor > local (16)

[6d/f803e5] COMBINE (9) [100%] 16 of 16 ✔

Number of sequences for chromosome J: 398

Number of sequences for chromosome G: 583

Number of sequences for chromosome O: 597

Number of sequences for chromosome N: 435

Number of sequences for chromosome B: 456

Number of sequences for chromosome E: 323

Number of sequences for chromosome K: 348

Number of sequences for chromosome H: 321

Number of sequences for chromosome C: 186

Number of sequences for chromosome M: 505

Number of sequences for chromosome L: 580

Number of sequences for chromosome A: 118

Number of sequences for chromosome D: 836

Number of sequences for chromosome F: 140

Number of sequences for chromosome P: 513

Number of sequences for chromosome I: 245Key Points

- A Nextflow process is an independent step in a workflow.

- Processes contain up to five definition blocks including: directives, inputs, outputs, when clause and finally a script block.

- The script block contains the commands you would like to run.

- A process should have a script but the other four blocks are optional.

- Inputs are defined in the input block with a type qualifier and a name.

Content from Processes Part 2

Last updated on 2024-12-04 | Edit this page

Estimated time: 40 minutes

Overview

Questions

- How do I get data, files, and values, out of processes?

- How do I handle grouped input and output?

- How can I control when a process is executed?

- How do I control resources, such as number of CPUs and memory, available to processes?

- How do I save output/results from a process?

Objectives

- Define outputs to a process.

- Understand how to handle grouped input and output using the tuple qualifier.

- Understand how to use conditionals to control process execution.

- Use process directives to control execution of a process.

- Use the

publishDirdirective to save result files to a directory.

Outputs

We have seen how to input data into a process; now we will see how to output files and values from a process.

The output declaration block allows us to define the

channels used by the process to send out the files and values

produced.

An output block is not required, but if it is present it can contain one or more output declarations.

The output block follows the syntax shown below:

Output values

Like the input, the type of output data is defined using type qualifiers.

The val qualifier allows us to output a value defined in

the script.

Because Nextflow processes can only communicate through channels, if we want to share a value output of one process as input to another process, we would need to define that value in the output declaration block as shown in the following example:

GROOVY

//process_output_value.nf

params.transcriptome="${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process COUNT_CHR_SEQS {

input:

val chr

output:

val chr

script:

"""

zgrep -c '^>Y'$chr $params.transcriptome

"""

}

// Both 'Channel' and 'channel' keywords work to generate channels.

// However, it is a good practice to be consistent through the whole pipeline development

chr_ch = channel.of('A'..'P')

workflow {

COUNT_CHR_SEQS(chr_ch)

// use the view operator to display contents of the channel

COUNT_CHR_SEQS.out.view()

}OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `p1.nf` [jovial_lavoisier] - revision: a652ef75d4

executor > local (16)

executor > local (16)

[6a/d82669] process > COUNT_CHR_SEQS (16) [100%] 16 of 16 ✔

B

456

A

118

C

186

[..truncated..]

Output files

If we want to capture a file instead of a value as output we can use

the path qualifier that can capture one or more files

produced by the process, over the specified channel.

GROOVY

//process_output_file.nf

params.transcriptome="${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process COUNT_CHR_SEQS {

input:

val chr

output:

path "${chr}_seq_count.txt"

script:

"""

zgrep -c '^>Y'$chr $params.transcriptome > ${chr}_seq_count.txt

"""

}

// Both 'Channel' and 'channel' keywords work to generate channels.

// However, it is a good practice to be consistent through the whole pipeline development

chr_ch = channel.of('A'..'P')

workflow {

COUNT_CHR_SEQS(chr_ch)

// use the view operator to display contents of the channel

COUNT_CHR_SEQS.out.view()

}OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `process_output_file.nf` [angry_lichterman] - revision: 6a46c69413

executor > local (16)

[95/ec5d62] process > COUNT_CHR_SEQS (13) [100%] 16 of 16 ✔

/Users/ggrimes2/Documents/process_wf/work/f2/6d5c44985a15feb0555b7b71c37a9c/J_seq_count.txt

executor > local (16)

[95/ec5d62] process > COUNT_CHR_SEQS (13) [100%] 16 of 16 ✔

work/f2/6d5c44985a15feb0555b7b71c37a9c/J_seq_count.txt

work/4f/f810942341d003acc80c2603671177/B_seq_count.txt

work/23/883ccf187b5357137a9a87d98717c0/I_seq_count.txt

[..truncated..]In the above example the process COUNT_CHR_SEQS creates

a file named <chr>_seq_count.txt in the work

directory containing the number of transcripts within that

chromosome.

Since a file parameter using the same name,

<chr>_seq_count.txt, is declared in the output block,

when the task is completed that file is sent over the output

channel.

A downstream operator, such as .view or a

process declaring the same channel as input will be able to

receive it.

Multiple output files

When an output file name contains a * or ?

metacharacter it is interpreted as a pattern match. This allows us to

capture multiple files into a list and output them as a one item

channel.

For example, here we will capture the files

sequence_ids.txt and sequence.txt produced as

results from SPLIT_FASTA in the output channel.

GROOVY

//process_output_multiple.nf

params.transcriptome="${projectDir}/data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz"

process SPLIT_FASTA {

input:

path transcriptome

output:

path "*"

script:

"""

zgrep '^>' $transcriptome > sequence_ids.txt

zgrep -v '^>' $transcriptome > sequence.txt

"""

}

// Both 'Channel' and 'channel' keywords work to generate channels.

// However, it is a good practice to be consistent through the whole pipeline development

transcriptome_ch = channel.fromPath(params.transcriptome)

workflow {

SPLIT_FASTA(transcriptome_ch)

// use the view operator to display contents of the channel

SPLIT_FASTA.out.view()

}OUTPUT

N E X T F L O W ~ version 21.10.6

Launching `process_output_multiple.nf` [goofy_meitner] - revision: 53cbf7e5a4

executor > local (1)

[21/01e6ba] process > SPLIT_FASTA (1) [100%] 1 of 1 ✔

[/work/21/01e6baac41d2f37531f86dc7a57034/sequence.txt, work/21/01e6baac41d2f37531f86dc7a57034/sequence_ids.txt]

Note: There are some caveats on glob pattern behaviour:

- Input files are not included in the list of possible matches.

- Glob pattern matches against both files and directories path.

- When a two stars pattern

**is used to recurse through subdirectories, only file paths are matched i.e. directories are not included in the result list.

Output channels

Modify the nextflow script process_exercise_output.nf to

include an output block that captures the different output file

${chr}_seqids.txt.

GROOVY

//process_exercise_output.nf

process EXTRACT_IDS {

input:

path transcriptome

each chr

//add output block here to capture the file "${chr}_seqids.txt"

script:

"""

zgrep '^>Y'$chr $transcriptome > ${chr}_seqids.txt

"""

}

transcriptome_ch = channel.fromPath('data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz')

chr_ch = channel.of('A'..'P')

workflow {

EXTRACT_IDS(transcriptome_ch, chr_ch)

EXTRACT_IDS.out.view()

}GROOVY

//process_exercise_output_answer.nf

process EXTRACT_IDS {

input:

path transcriptome

each chr

//add output block here to capture the file "${chr}_seqids.txt"

output:

path "${chr}_seqids.txt"

script:

"""

zgrep '^>Y'$chr $transcriptome > ${chr}_seqids.txt

"""

}

transcriptome_ch = channel.fromPath('data/yeast/transcriptome/Saccharomyces_cerevisiae.R64-1-1.cdna.all.fa.gz')

chr_ch = channel.of('A'..'P')

workflow {

EXTRACT_IDS(transcriptome_ch, chr_ch)

EXTRACT_IDS.out.view()

}Grouped inputs and outputs

So far we have seen how to declare multiple input and output

channels, but each channel was handling only one value at time. However

Nextflow can handle groups of values using the tuple

qualifiers.

In tuples the first item is the grouping key and the second item is the list.

[group_key,[file1,file2,...]]When using channel containing a tuple, such a one created with

.filesFromPairs factory method, the corresponding input

declaration must be declared with a tuple qualifier,

followed by definition of each item in the tuple.

GROOVY

//process_tuple_input.nf

process TUPLEINPUT{

input:

tuple val(sample_id), path(reads)

script:

"""

echo $sample_id

echo $reads

"""

}

reads_ch = Channel.fromFilePairs('data/yeast/reads/ref1_{1,2}.fq.gz')

workflow {

TUPLEINPUT(reads_ch)

}outputs

OUTPUT

ref1

ref1_1.fq.gz ref1_2.fq.gzIn the same manner an output channel containing tuple of values can

be declared using the tuple qualifier following by the

definition of each tuple element in the tuple.

In the code snippet below the output channel would contain a tuple

with the grouping key value as the Nextflow variable

sample_id and a list containing the files matching the

following pattern "${sample_id}.fq.gz".

An example can be seen in this script below.

GROOVY

//process_tuple_io.nf

process COMBINE_FQ {

input:

tuple val(sample_id), path(reads)

output:

tuple val(sample_id), path("${sample_id}.fq.gz")

script:

"""

cat $reads > ${sample_id}.fq.gz

"""

}

reads_ch = Channel.fromFilePairs('data/yeast/reads/ref1_{1,2}.fq.gz')

workflow {

COMBINE_FQ(reads_ch)

COMBINE_FQ.out.view()

}The output is now a tuple containing the sample id and the combined fastq files.

OUTPUT

[ref1, work/2d/a073d34b5b3231b1f57872599bd308/ref1.fq]Composite inputs and outputs

Fill in the blank ___ input and output qualifiers for

process_exercise_tuple.nf. Note: the

output for the COMBINE_REPS process.